Rethinking User Experience

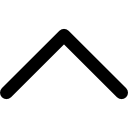

In a collaborative project for my Human-Computer Interaction course, we redesigned a health app that was falling short in communicating crucial information to its users. The goal was to transform how the app informed users about the personal health data it collected and used.

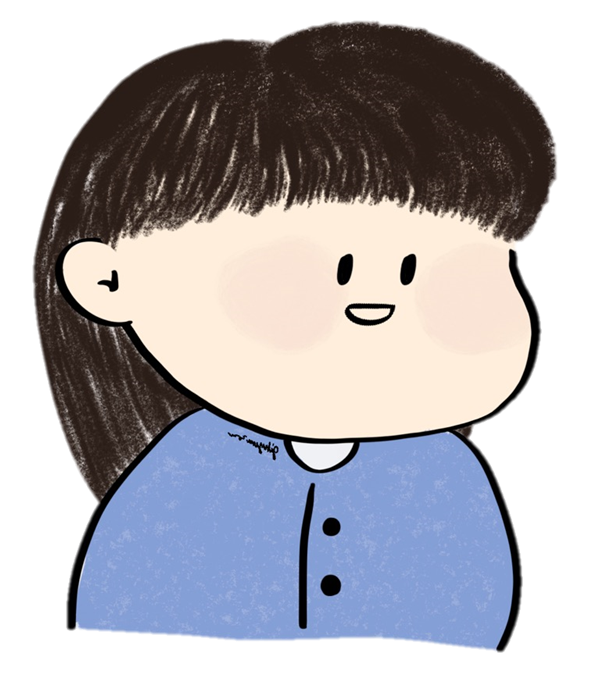

We used Figma to integrate user-friendly features: informative pop-ups that appeared at the moment of need, clear checkboxes for consent, easily recognizable info icons, an accessible FAQ section, and succinct bullet-point summaries replacing lengthy, jargon-filled text.

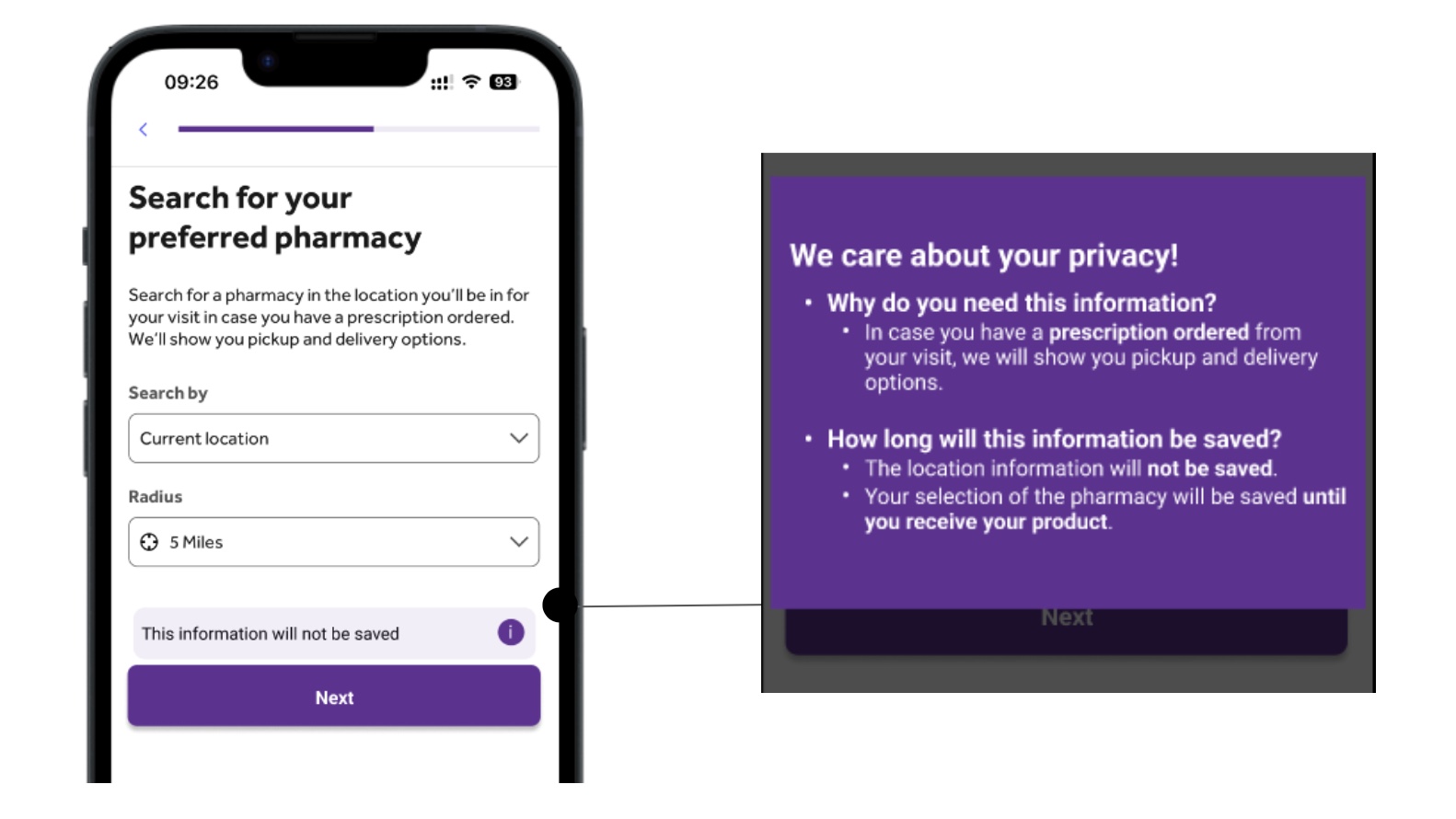

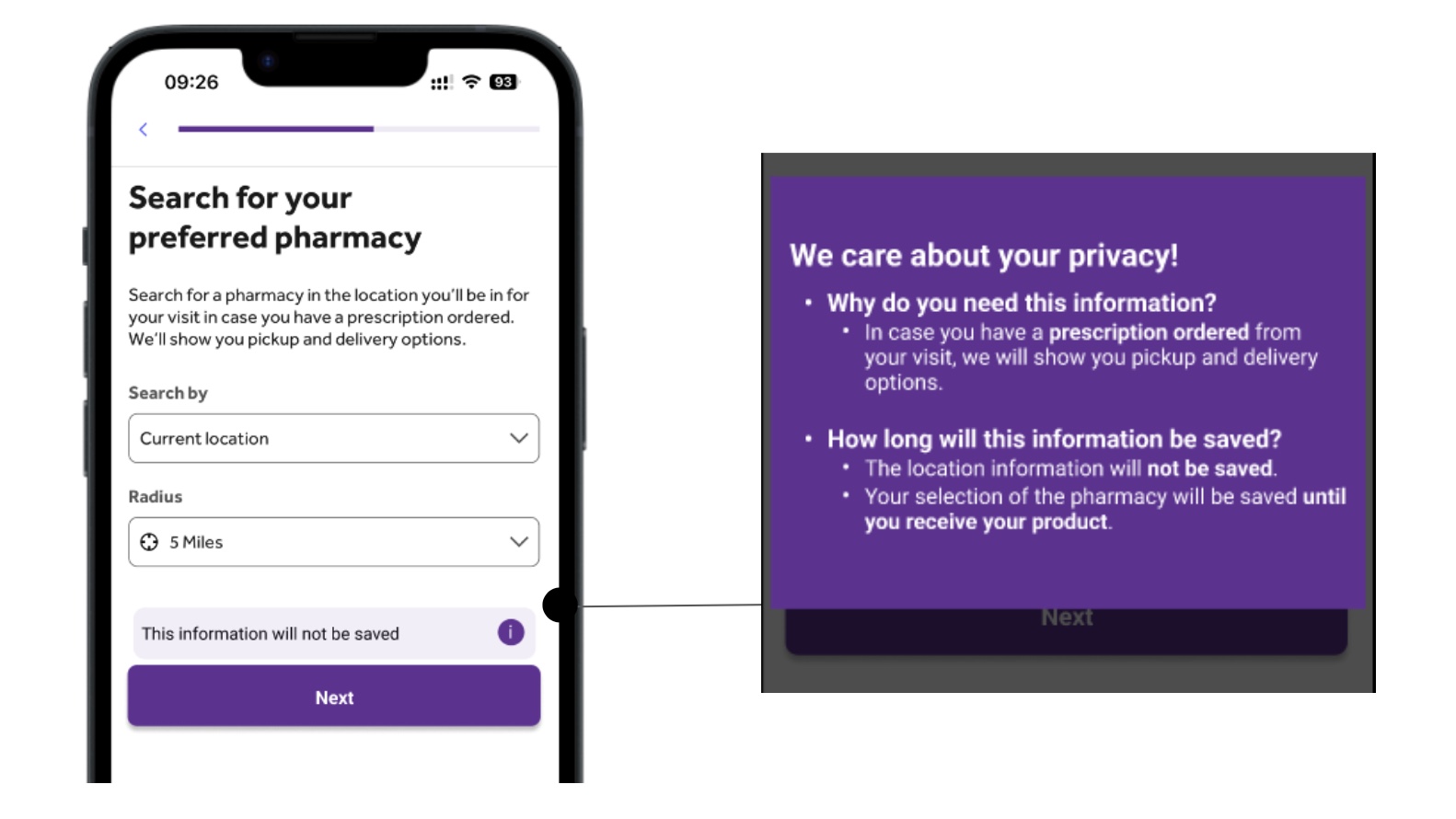

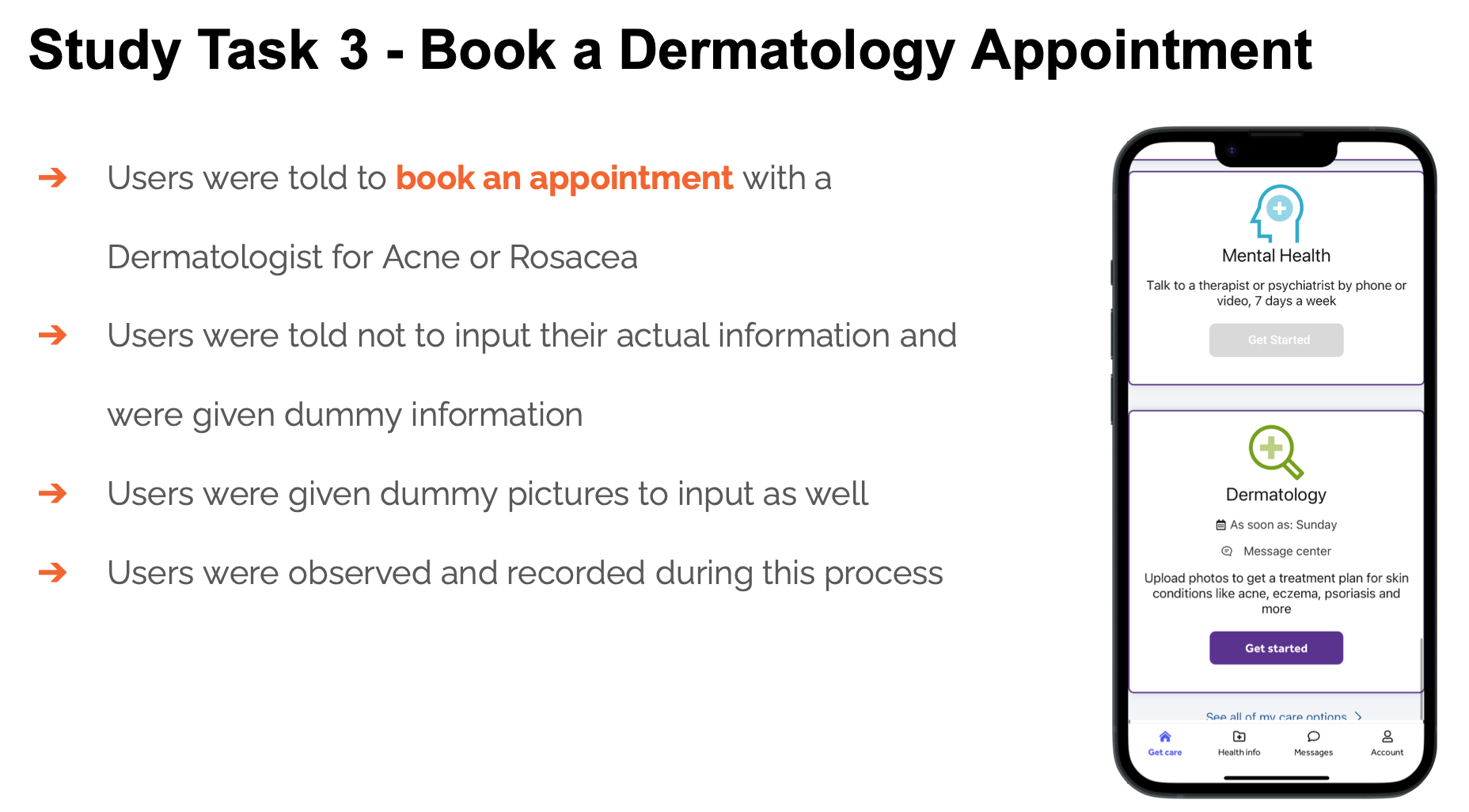

To measure the impact of our redesign, my team and I created and ran a user experience survey with several tasks that had participants try the app. We crafted a blend of qualitative and quantitative questions, including ones that tested users' actual understanding of the information provided by the original and redesigned apps, and ones that asked about their experiences with both.

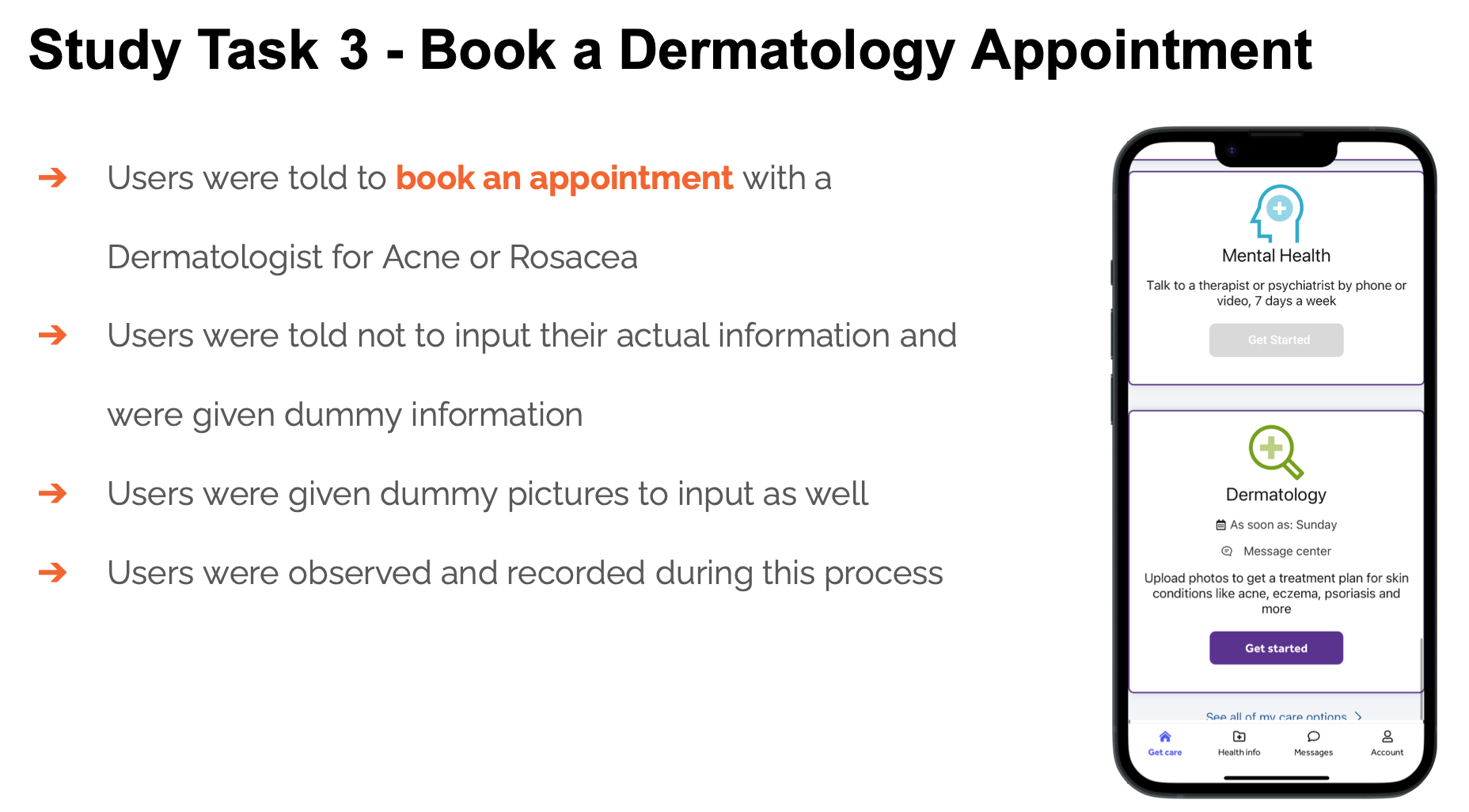

Our analysis revealed that while we had significantly improved users' understanding of the health information storage and usage policies, there was a nuanced distinction between understanding and awareness. This key finding highlighted how complex user engagement with privacy information is, and the need for persistence in design.


In our Machine Learning course, my team explored two MNIST datasets using various machine learning techniques. Our primary goal was to identify the most effective classifier and parameter settings for each dataset. My role in this project was in-depth experimentation with Decision Tree, Random Forest, and Boosting classifiers to uncover the best solutions.

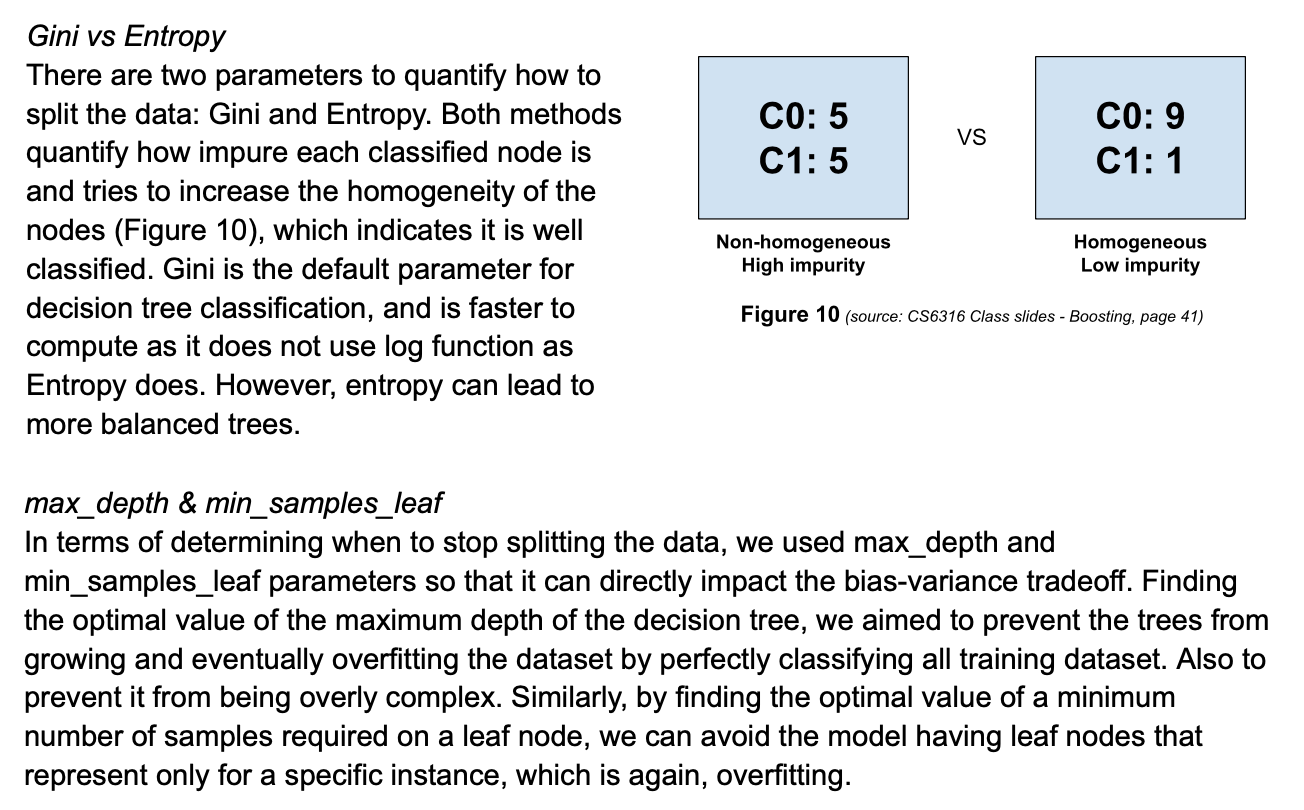

I began by delving into the Decision Tree classifier, comparing different parameter combinations such as Gini index vs. Entropy, along with max_depth and min_samples_leaf, to ascertain the optimal setup through cross-validation.
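A parameter comparison of this kind can be sketched with scikit-learn's `GridSearchCV`. This is a minimal illustration, not the project's actual code: the bundled 8x8 digits dataset stands in for the course datasets, and the grid values are assumptions chosen only to show the shape of the search.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import GridSearchCV
from sklearn.tree import DecisionTreeClassifier

# Stand-in data (assumption): scikit-learn's small digits set, not the course datasets.
X, y = load_digits(return_X_y=True)

# Illustrative grid (assumption): the values tested in the project are not listed here.
param_grid = {
    "criterion": ["gini", "entropy"],
    "max_depth": [5, 10, None],
    "min_samples_leaf": [1, 5],
}

# Every combination in the grid is scored by cross-validated accuracy.
search = GridSearchCV(
    DecisionTreeClassifier(random_state=0),
    param_grid,
    cv=5,
    scoring="accuracy",
)
search.fit(X, y)

best_params = search.best_params_
best_score = search.best_score_
print(best_params, round(best_score, 3))
```

`search.cv_results_` holds the per-combination scores, which is what a comparison of Gini vs. Entropy at each depth would be plotted from.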

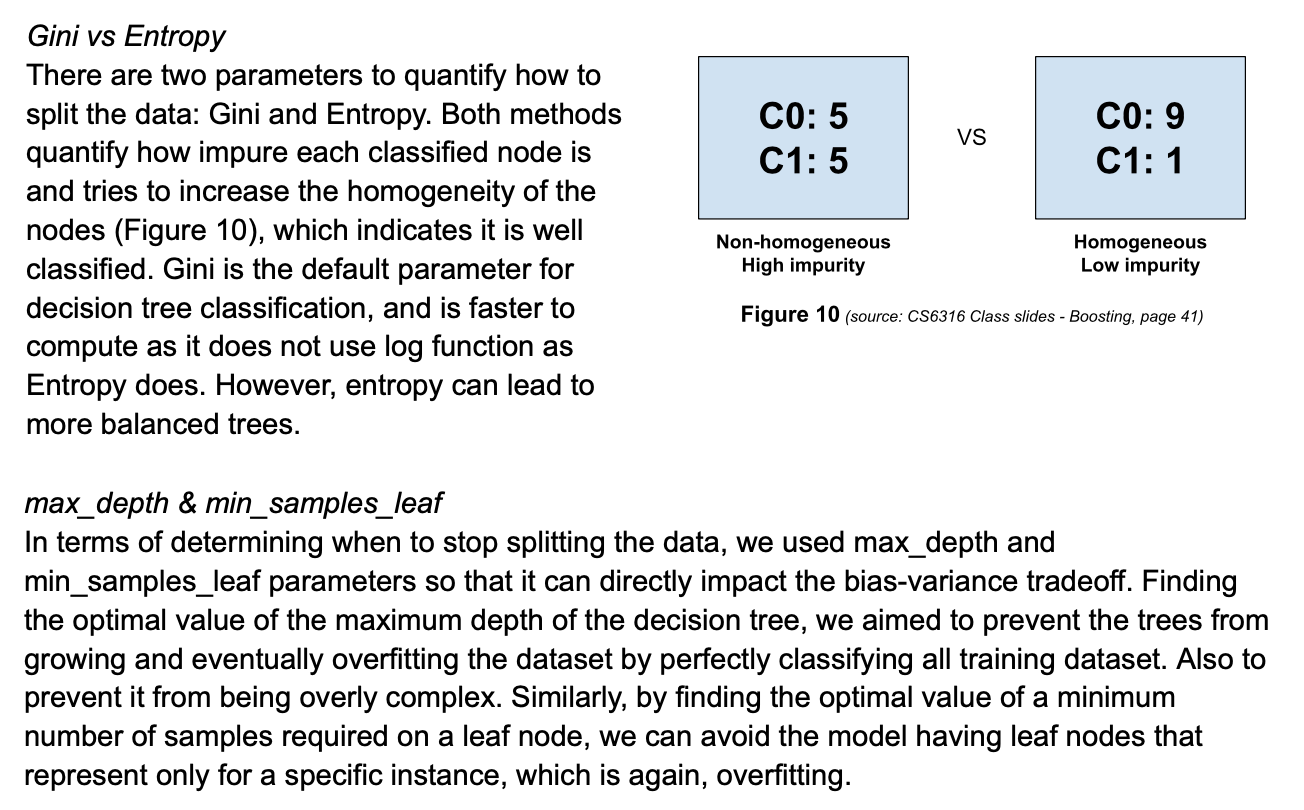

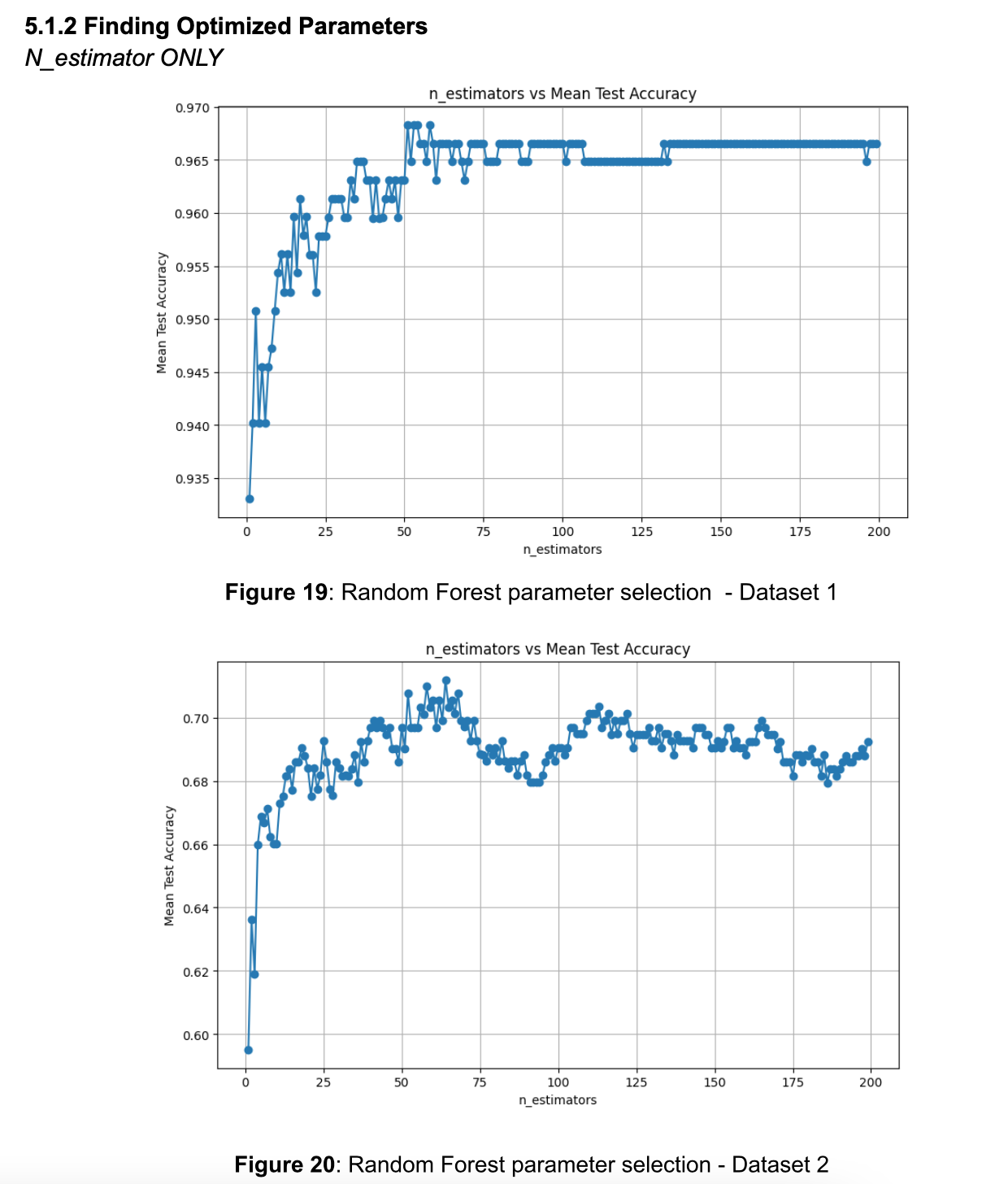

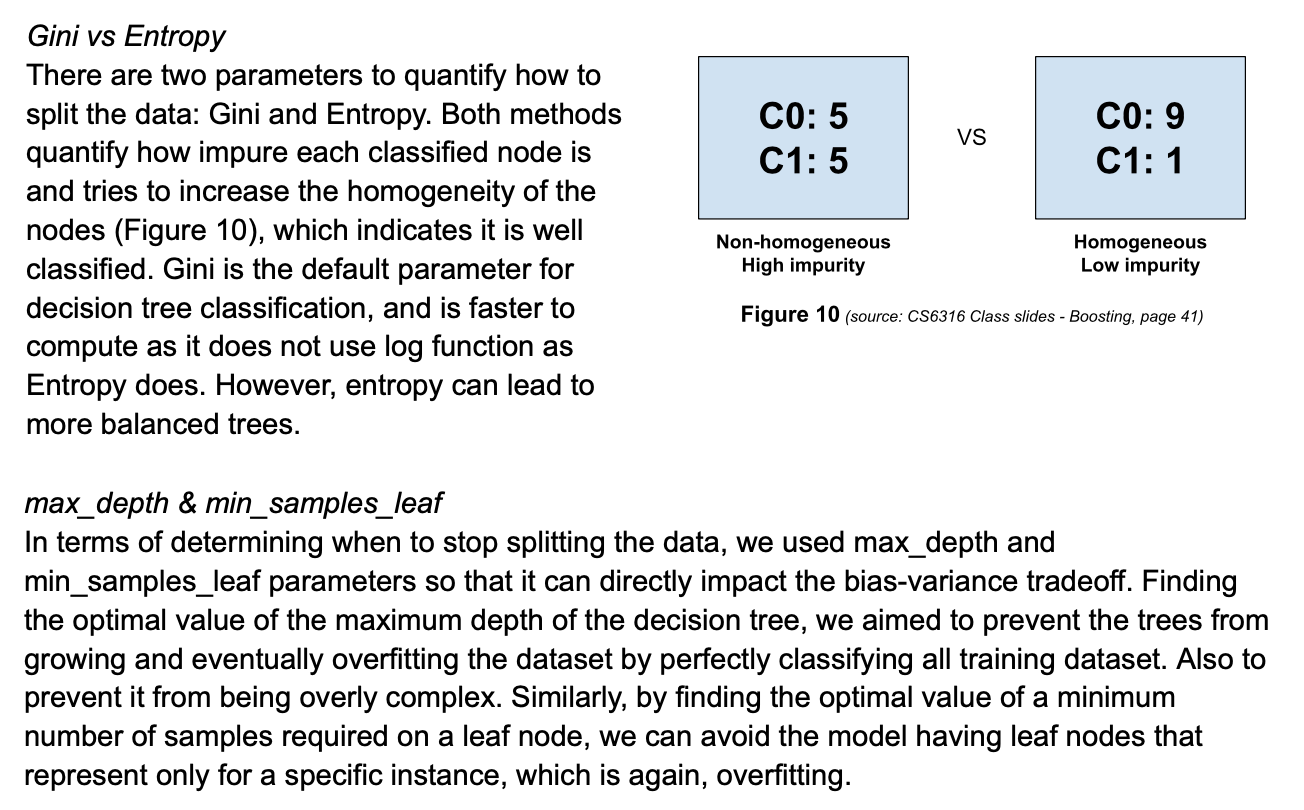

Building on this, I expanded my exploration to the Random Forest classifier, analyzing the effects of n_estimators and max_depth on model performance.
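One way to look at the joint effect of `n_estimators` and `max_depth` is a small sweep with `cross_val_score`. The sketch below again uses the bundled digits dataset as a stand-in, and the parameter values are illustrative assumptions rather than the ones used in the project.

```python
from itertools import product

from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_val_score

# Stand-in data (assumption): not the course datasets.
X, y = load_digits(return_X_y=True)

# Illustrative values (assumption) for the two parameters under study.
n_estimators_values = [10, 50]
max_depth_values = [3, None]

results = {}
for n_estimators, max_depth in product(n_estimators_values, max_depth_values):
    model = RandomForestClassifier(
        n_estimators=n_estimators, max_depth=max_depth, random_state=0
    )
    # Mean accuracy over 5 folds for this parameter pair.
    scores = cross_val_score(model, X, y, cv=5, scoring="accuracy")
    results[(n_estimators, max_depth)] = scores.mean()

# Print the combinations from best to worst mean accuracy.
for (n_estimators, max_depth), acc in sorted(results.items(), key=lambda kv: -kv[1]):
    print(f"n_estimators={n_estimators}, max_depth={max_depth}: {acc:.3f}")
```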

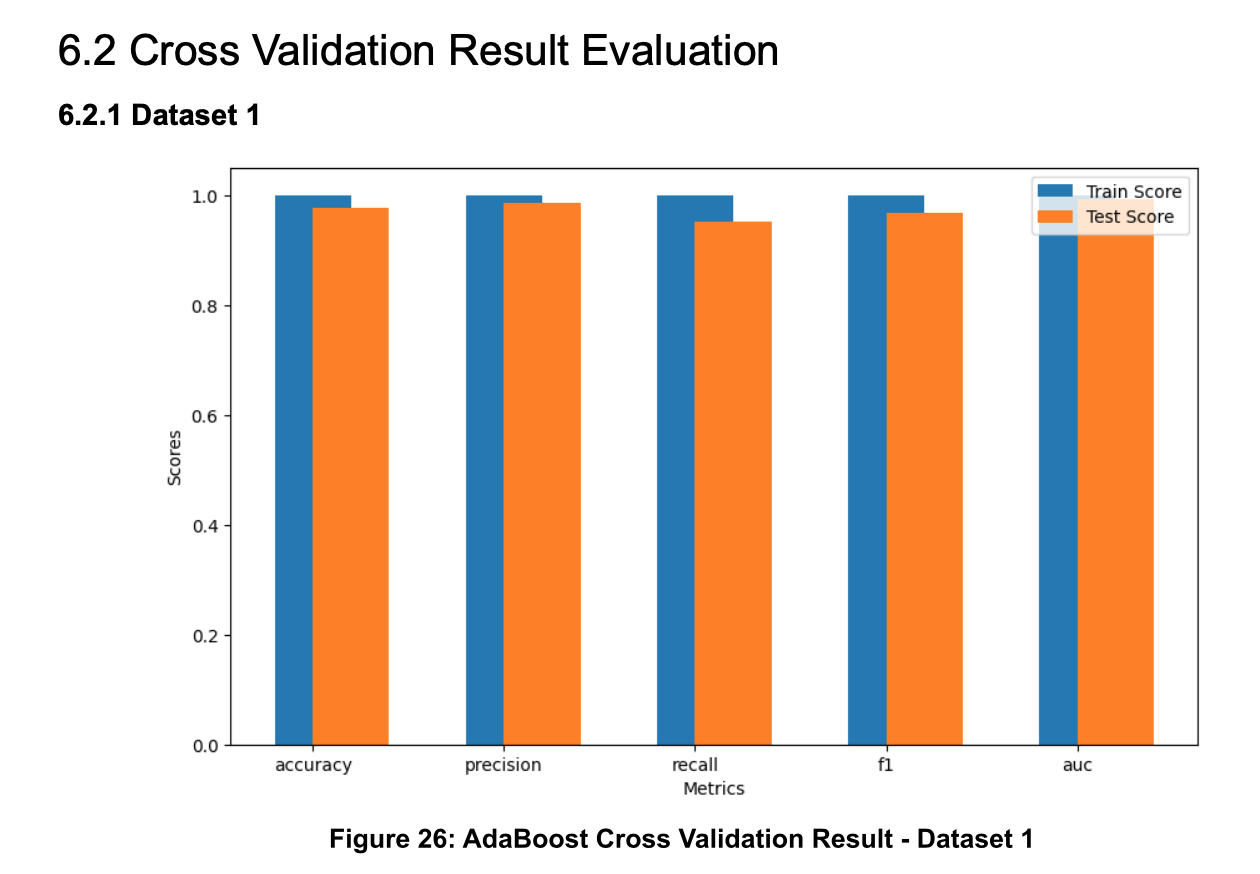

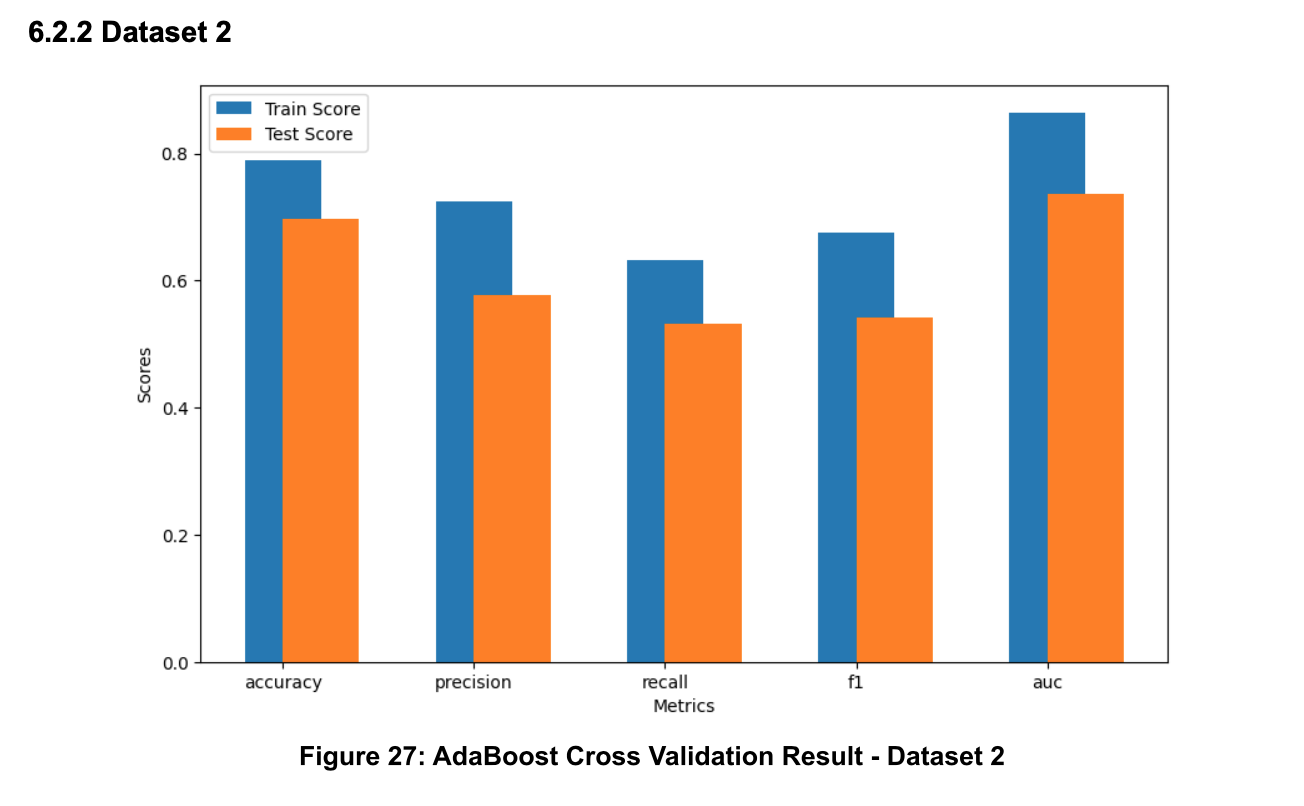

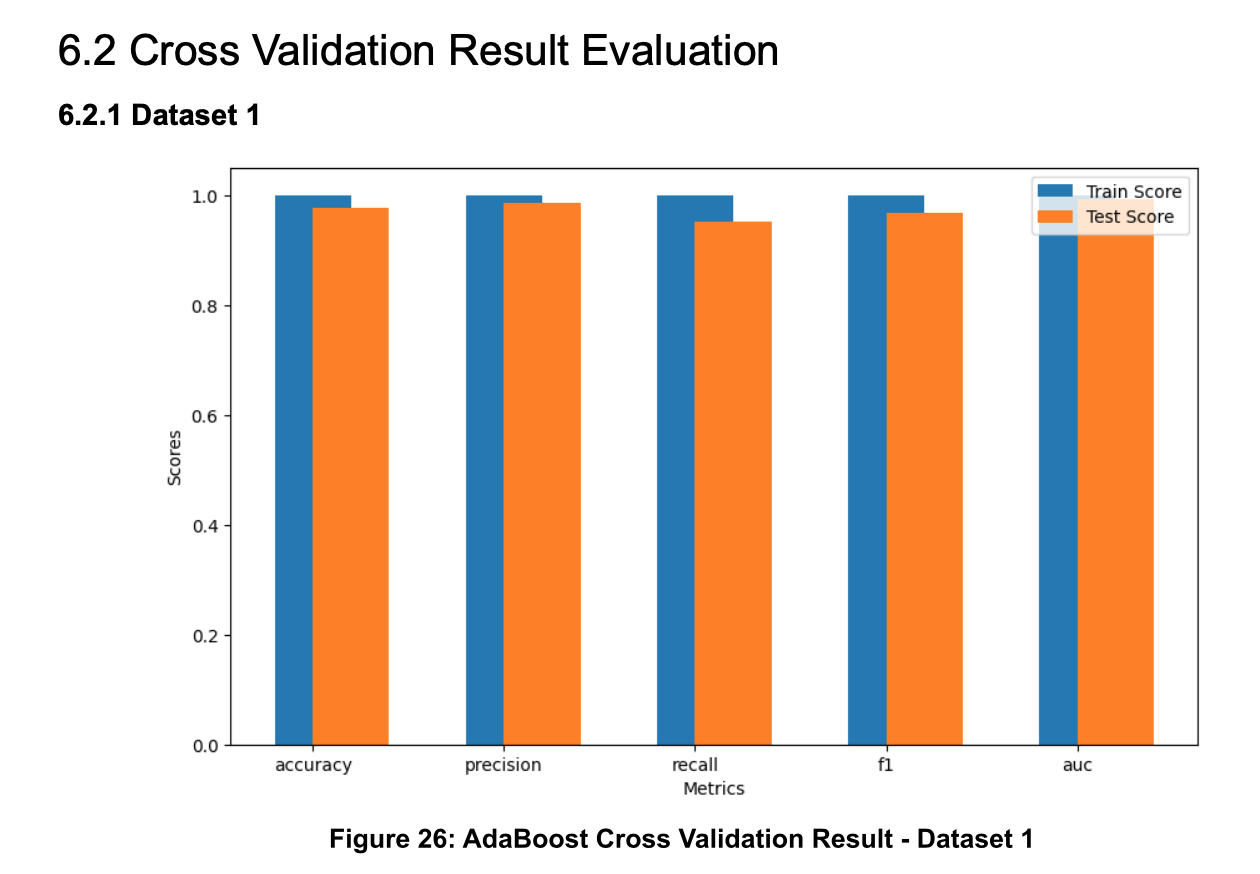

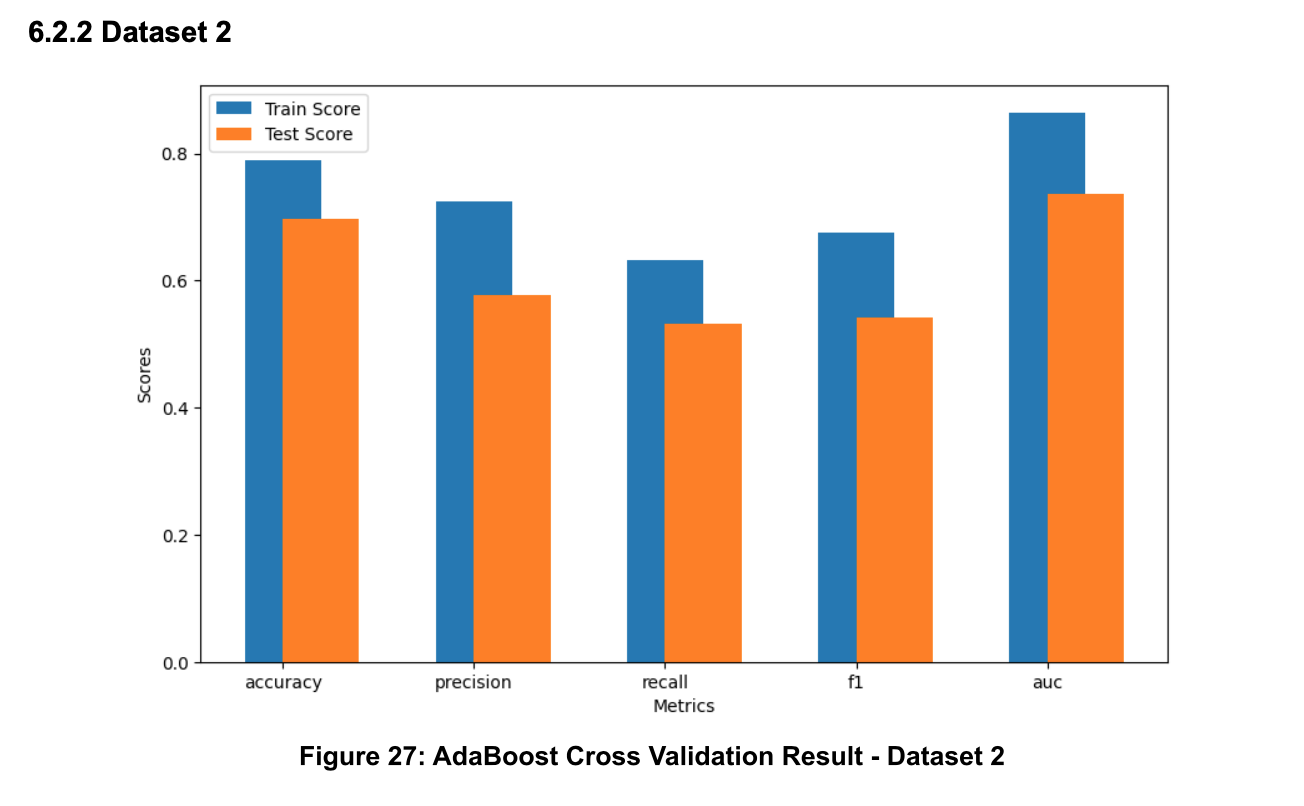

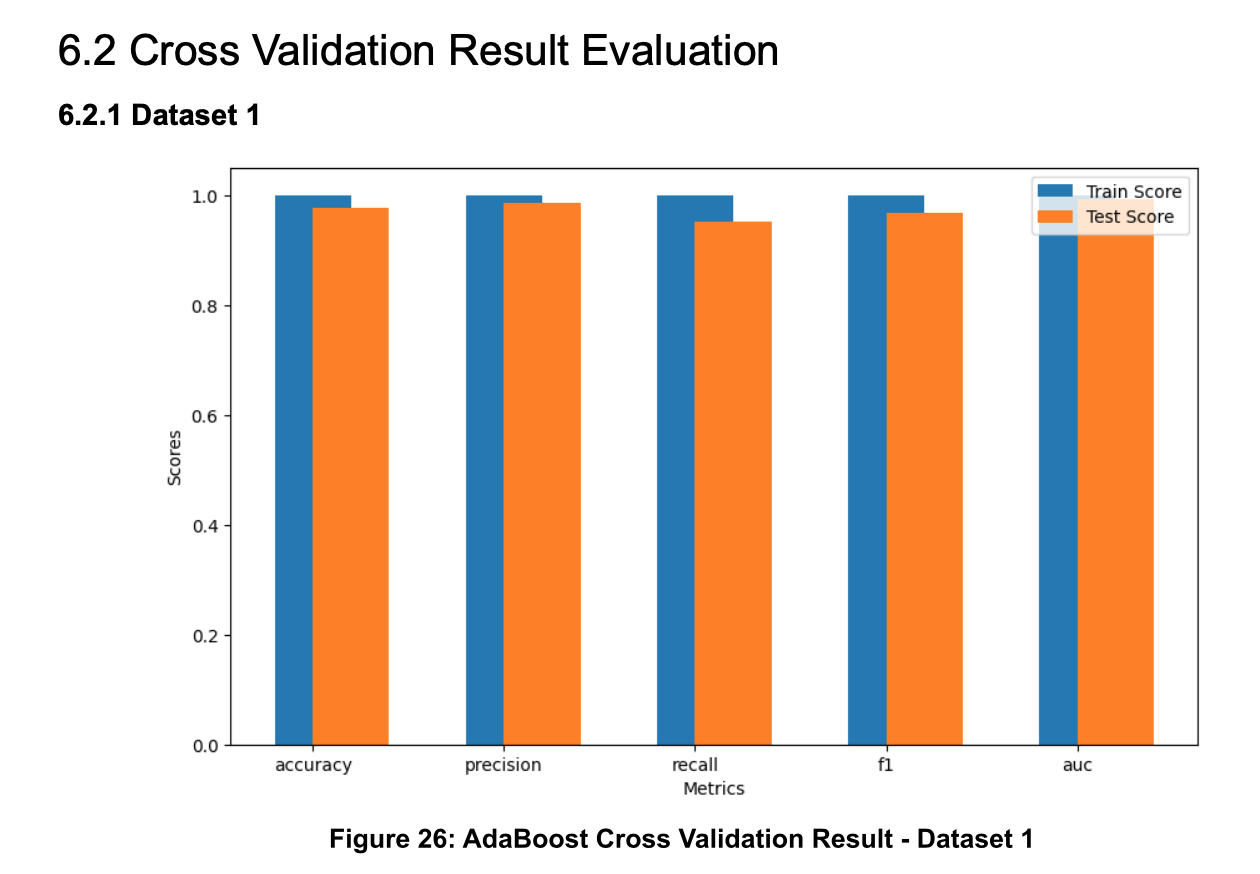

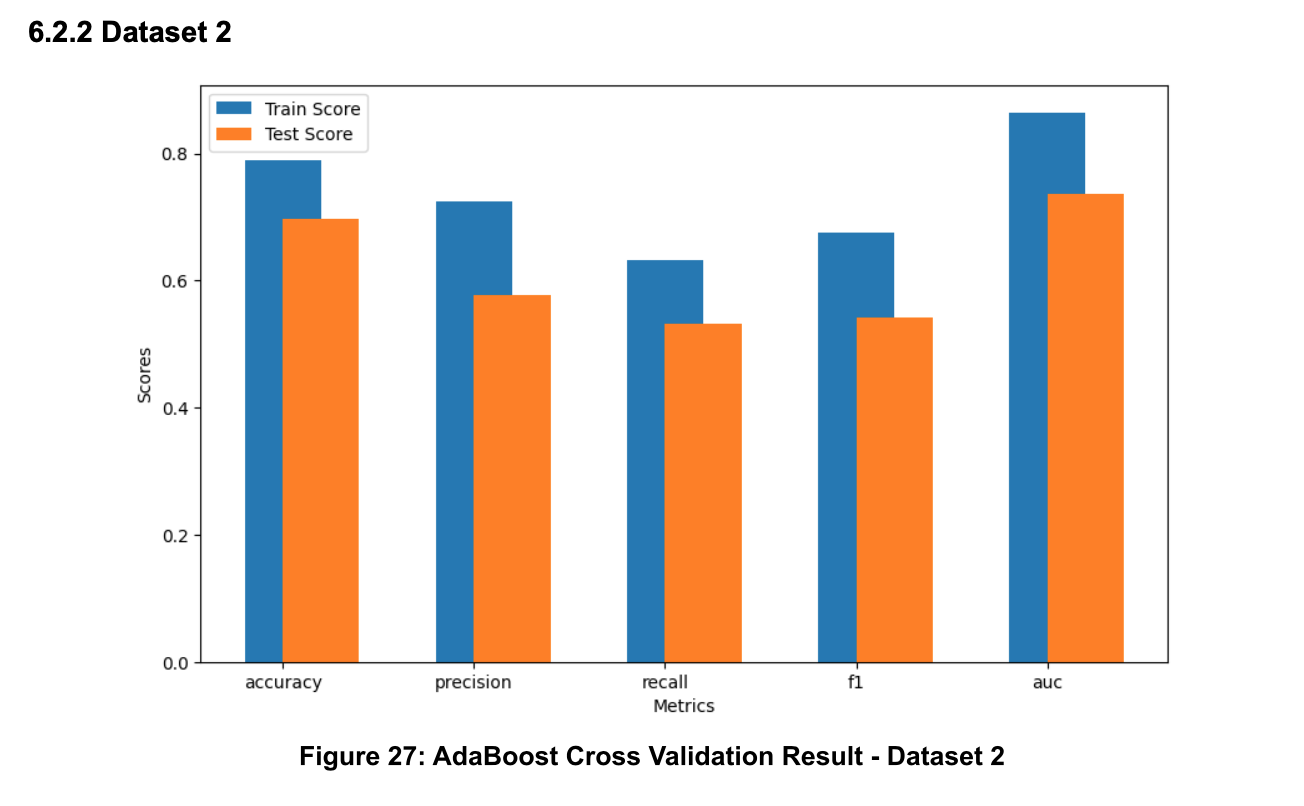

I evaluated each classifier with 10-fold cross-validation, reporting accuracy, precision, recall, F1 score, and AUC to capture each model's strengths and weaknesses.
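A multi-metric evaluation like this can be sketched with scikit-learn's `cross_validate`. The dataset and the model settings below are assumptions for illustration. Because the stand-in data has ten classes, the macro-averaged and one-vs-rest variants of the metrics are used; a binary task would use the plain `precision`, `recall`, `f1`, and `roc_auc` scorers instead.

```python
from sklearn.datasets import load_digits
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import cross_validate

# Stand-in data (assumption): not the course datasets.
X, y = load_digits(return_X_y=True)

# Multiclass-friendly scorer names; all are built into scikit-learn.
scoring = ["accuracy", "precision_macro", "recall_macro", "f1_macro", "roc_auc_ovr"]

# Model settings are illustrative assumptions.
model = RandomForestClassifier(n_estimators=50, random_state=0)

# cv=10 gives stratified 10-fold cross-validation for a classifier.
cv_results = cross_validate(model, X, y, cv=10, scoring=scoring)

# Average each metric across the ten folds.
summary = {name: cv_results[f"test_{name}"].mean() for name in scoring}
for name, value in summary.items():
    print(f"{name}: {value:.3f}")
```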

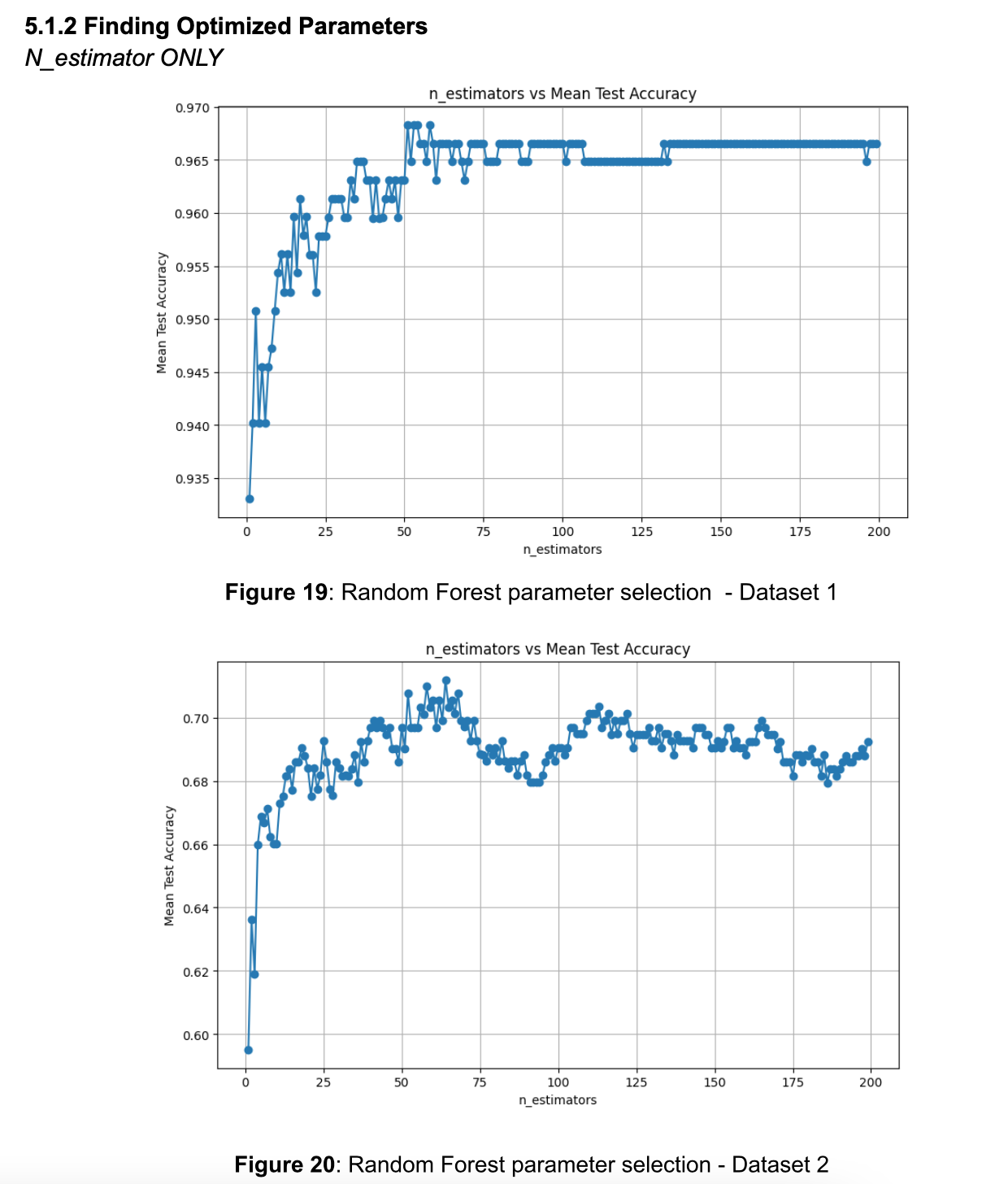

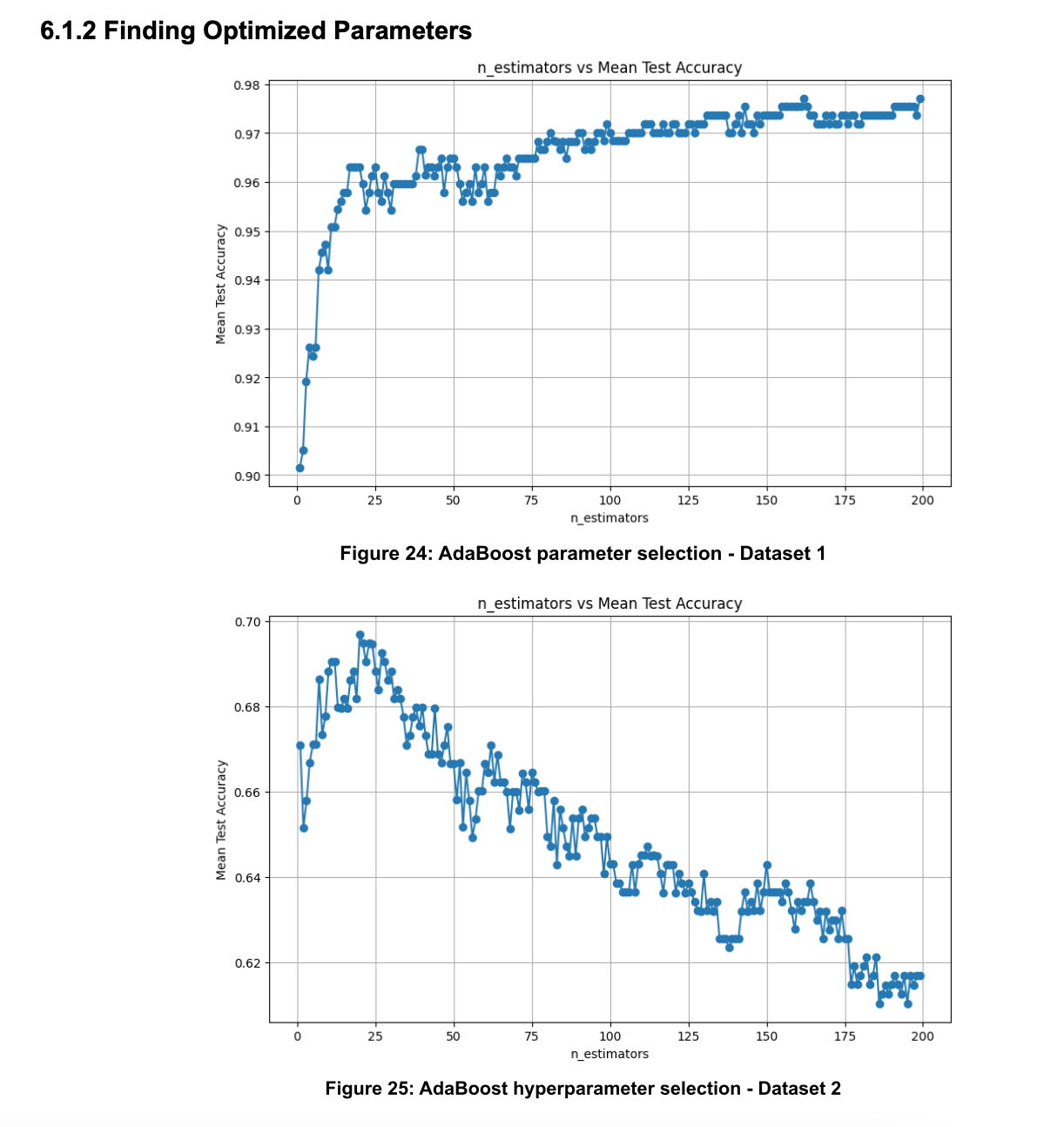

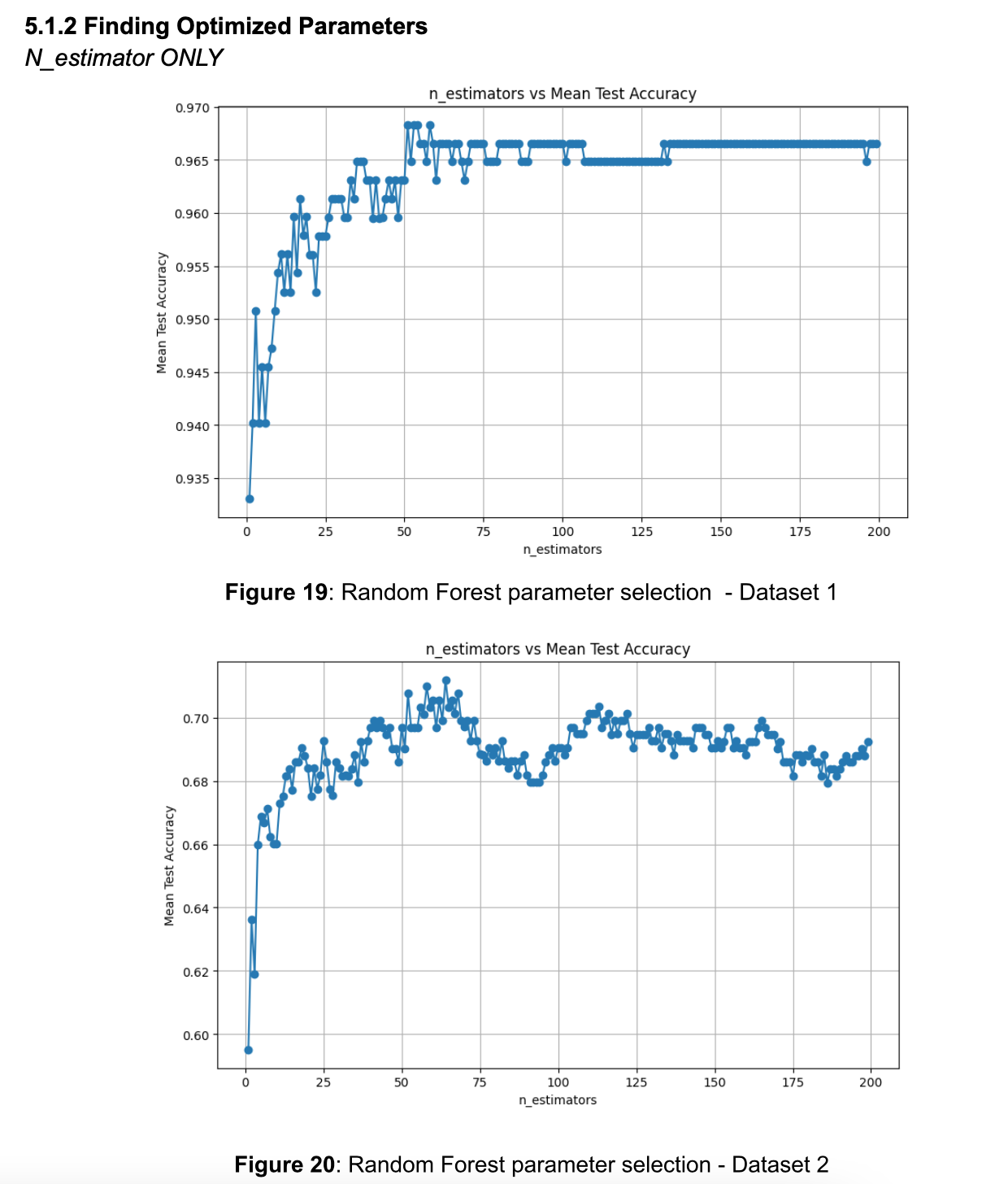

I also experimented with AdaBoost, a technique known for combining multiple weak learners into a strong ensemble model. This approach was particularly intriguing because boosting is often reported to resist overfitting, a challenge I noted in both Decision Tree and Random Forest models under certain conditions.

Through iterative experimentation, especially with the n_estimators parameter in AdaBoost, I gained valuable insights into the characteristics of the datasets. Notably, dataset 1's patterns were simple enough to be captured by a small number of weak learners, after which the model began to show signs of overfitting.
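The effect of `n_estimators` in AdaBoost can be inspected without refitting, since `staged_score` reports the score after each boosting round. The sketch below is a hedged illustration on stand-in data: the digits dataset, the train/test split, and the cap of 100 rounds are all assumptions, and picking the round on held-out data is done only to show where the curve peaks.

```python
from sklearn.datasets import load_digits
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import train_test_split

# Stand-in data (assumption): not the course datasets.
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=0
)

# Default weak learner is a depth-1 decision tree (a stump).
model = AdaBoostClassifier(n_estimators=100, random_state=0)
model.fit(X_train, y_train)

# Accuracy after 1, 2, 3, ... boosting rounds. Boosting may stop early,
# so the curves can be shorter than n_estimators.
train_curve = list(model.staged_score(X_train, y_train))
test_curve = list(model.staged_score(X_test, y_test))

# Number of weak learners at which held-out accuracy peaks.
best_n = max(range(len(test_curve)), key=test_curve.__getitem__) + 1
print(f"peak held-out accuracy {test_curve[best_n - 1]:.3f} at {best_n} learners")
```

A widening gap between `train_curve` and `test_curve` past `best_n` is one sign of the overfitting described above.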

This project was not just an exercise in applying machine learning algorithms; it was a deep dive into the nuanced interplay between classifier parameters and dataset characteristics, enriching my understanding of machine learning's practical applications and theoretical underpinnings.


In my Computational Behavior Modeling course, my team and I set out to evaluate the efficiency of state-of-the-art multi-agent pathfinding and pickup-and-delivery systems. Our work centered on a comprehensive comparison and optimization of multi-agent algorithms, including the TK algorithm, Reinforcement Learning, and a simple A* algorithm, all tailored to a custom-designed environment to meet our project's objectives.

I led the setup of the default environment, laying a solid foundation for our experiments. The environments, as presented below, represent small storage spaces with narrow paths along the aisles, such as libraries and small stores. I took on the challenge of modifying the updated Token Passing algorithm to better fit our designated environment, running thorough tests on different parameters to identify the configurations that outperformed our baseline measures.

Token Passing algorithms on 4 Environments

The results included detailed analysis and comparison of the TK algorithm against other algorithms we had implemented, highlighting the nuanced performances and offering insights into their operational efficiencies.

Interestingly, Reinforcement Learning did not stand out as we had first expected. The narrow paths made the environment difficult for the robots to learn, so successful training required an extensive amount of time. In contrast, the Token Passing algorithms readily found paths to complete pickup and delivery tasks and avoided collisions using hard-coded conditions for waiting and taking detours.
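The core idea behind token-passing-style planning can be sketched as follows: agents plan one at a time with space-time A*, and each reads a shared "token" of already reserved cells and moves so that it waits or detours instead of colliding. This is a simplified illustration, not the modified algorithm from the project. The grid, the helper names (`neighbors`, `plan`, `reserve`), and the time limit are all hypothetical, and the task assignment and endpoint rules of the full algorithm are left out.

```python
import heapq

# Hypothetical narrow aisle with a single side bay; '#' is a shelf or wall.
GRID = [
    ".......",
    "####.##",
]

def neighbors(cell, grid):
    """Yield the current cell (a wait move) and its free 4-connected neighbors."""
    r, c = cell
    for dr, dc in ((0, 0), (1, 0), (-1, 0), (0, 1), (0, -1)):
        nr, nc = r + dr, c + dc
        if 0 <= nr < len(grid) and 0 <= nc < len(grid[0]) and grid[nr][nc] != "#":
            yield (nr, nc)

def plan(start, goal, grid, token, max_t=50):
    """Space-time A*: shortest path avoiding reserved cells and head-on swaps."""
    def h(cell):
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    frontier = [(h(start), 0, start, [start])]
    seen = set()
    while frontier:
        _, t, cell, path = heapq.heappop(frontier)
        if cell == goal:
            return path
        if (cell, t) in seen or t >= max_t:
            continue
        seen.add((cell, t))
        for nxt in neighbors(cell, grid):
            vertex_conflict = (nxt, t + 1) in token["cells"]
            swap_conflict = (nxt, cell, t) in token["edges"]
            if not vertex_conflict and not swap_conflict:
                heapq.heappush(frontier, (t + 1 + h(nxt), t + 1, nxt, path + [nxt]))
    return None

def reserve(path, token, max_t=50):
    """Write a planned path into the token so later agents avoid it."""
    for t, cell in enumerate(path):
        token["cells"].add((cell, t))
    for t in range(len(path) - 1):
        token["edges"].add((path[t], path[t + 1], t))
    # The agent is assumed to rest at its last cell for the remaining time.
    for t in range(len(path), max_t + 1):
        token["cells"].add((path[-1], t))

token = {"cells": set(), "edges": set()}

# Agent A crosses the aisle left to right and reserves its path first.
path_a = plan((0, 0), (0, 6), GRID, token)
reserve(path_a, token)

# Agent B travels the opposite way and must step into the bay to let A pass.
path_b = plan((0, 6), (0, 0), GRID, token)
reserve(path_b, token)

print(path_a)
print(path_b)
```

In this layout the second agent has no way past the first except through the side bay, so its plan includes a detour and a wait. That is the kind of hard-coded yielding behavior that a learned policy would have to discover through training.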

This project honed my problem-specification skills, allowing me to translate complex problem scenarios into actionable simulation steps and conditions.

Adapting various algorithms to a unified testing environment presented significant initial challenges, yet through persistent experimentation, I gained a deep understanding of the mathematical principles driving algorithm performance under specific conditions.
